At a small local chapter meeting of a professional society, Gordon Moore gave a talk that laid out the rudiments of what would become "Moore's law," which would guide the electronics industry for a half century.

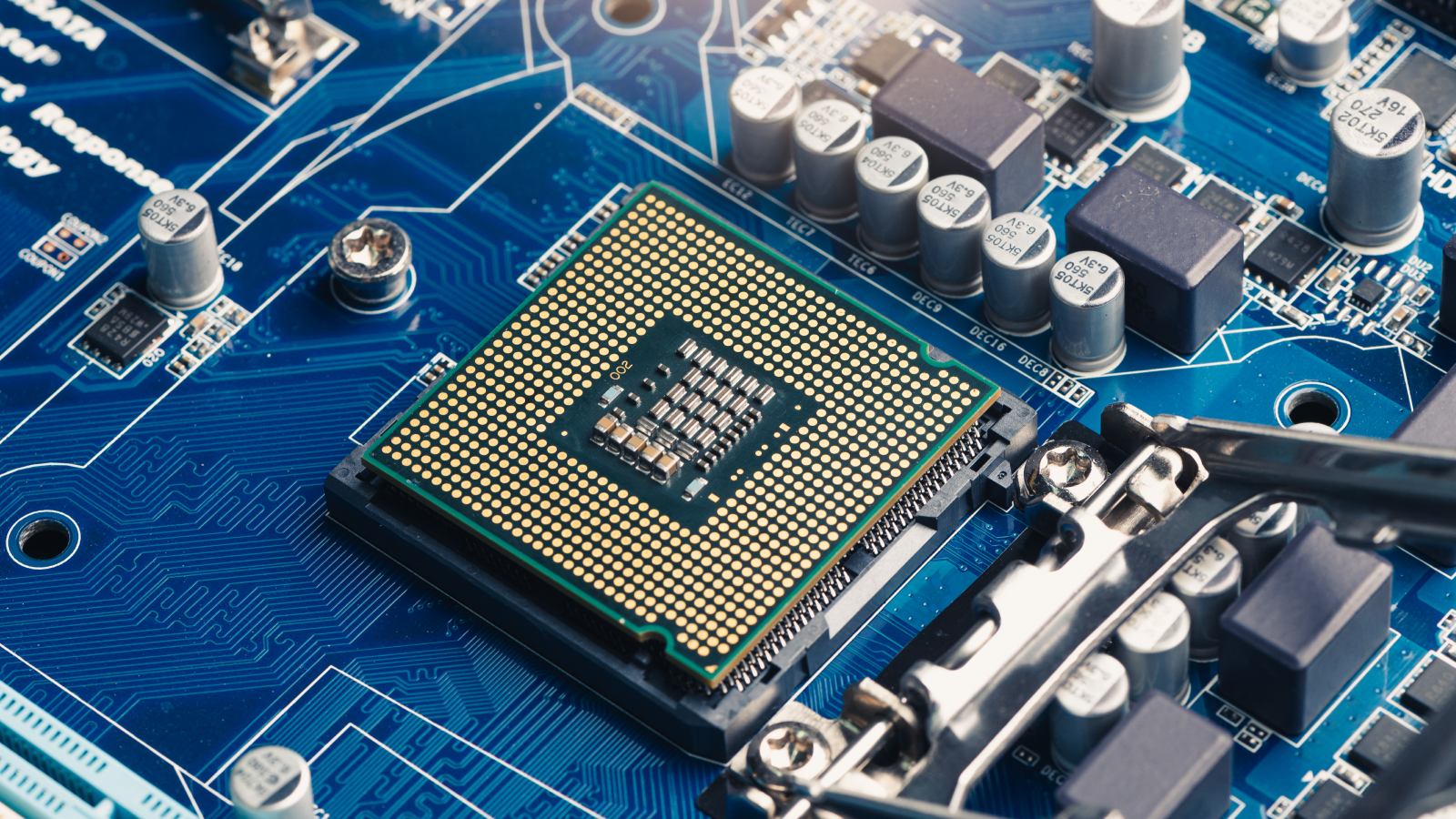

Moore's law holds that the number of transistors on an integrated circuit will double every two years. It has guided the technology industry for decades.

(Image credit: Eugene Mymrin/Getty Images)

Milestone: Moore's law introduced

Date: Dec. 2, 1964

Where: San Francisco Bay Area

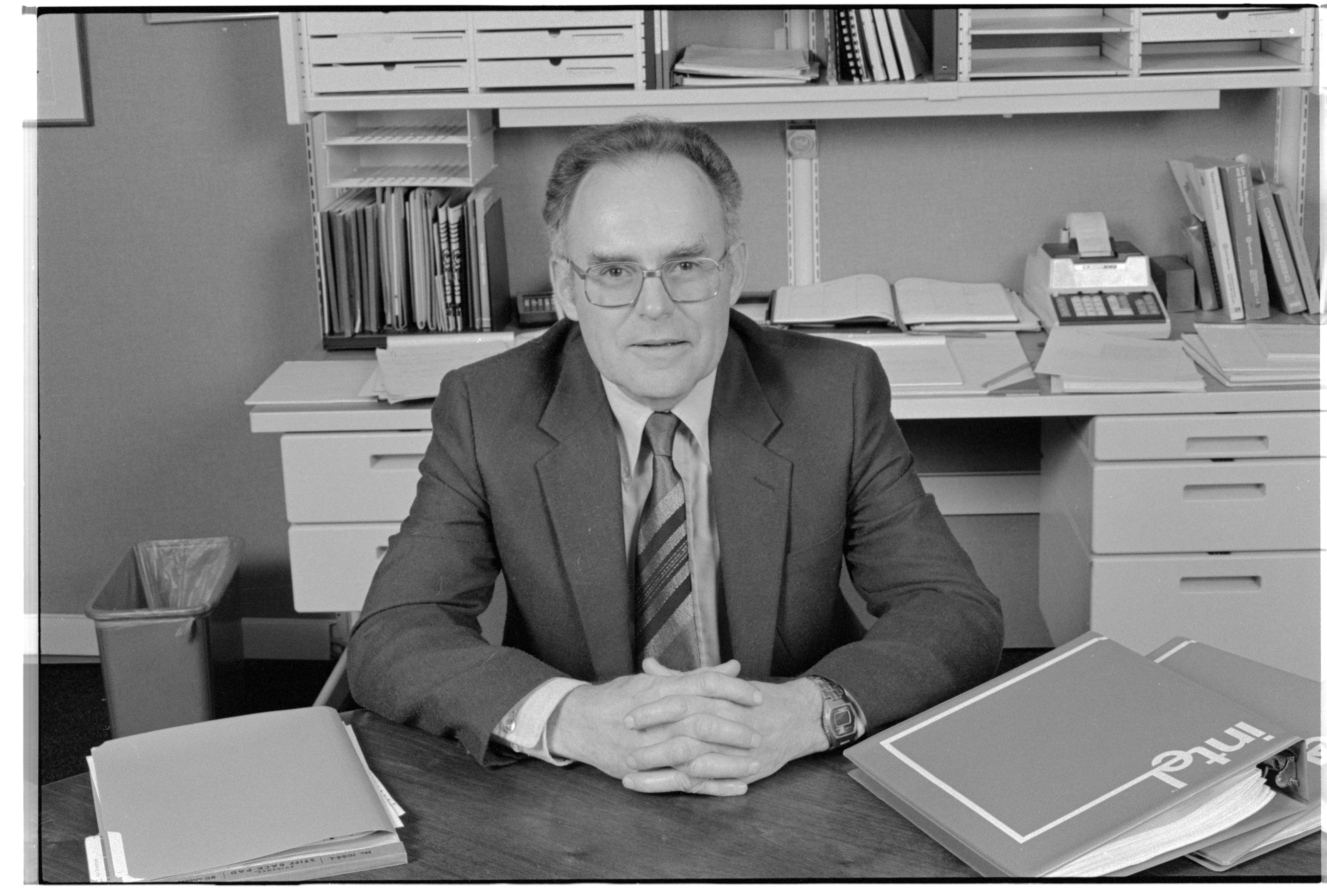

Who: Gordon Moore

At a low-key talk for a local professional society in 1964, computer scientist and chemist Gordon Moore laid out a prediction that would define the world of technology for more than 50 years.

The final version of this prediction would become known as "Moore's law," and it would drive progress in the semiconductor industry for decades.

Although it's called a law, it was a prediction based more on economic dictates and industry trends than on the physical laws of nature.

Moore was director of research and development at Fairchild Semiconductor when he gave the talk, and his goal was ultimately to sell more chips. At the time, computers were gigantic machines that took up a whole room, and integrated circuits, also known as microchips, had somewhat limited practical applications.

The silicon transistor, the workhorse that does calculations in a computer, had been invented just a decade earlier, and the integrated circuit, which allowed computers to be miniaturized, had been patented just five years earlier. In 1961, the electronics company RCA had built a 16-transistor chip, and by 1964, General Microelectronics had built a 120-transistor chip.

Moore witnessed this dramatic progress and noticed that it seemed to follow a mathematical rule: the number of components on a chip was doubling at a regular pace. Others later named this relationship "Moore's law."

Although Moore laid out the principle to The Electrochemical Society in 1964, it gained widespread traction in April of the following year, when he was asked to write an editorial in Electronics magazine. In it, he boldly predicted that as many as 65,000 components could be squeezed onto a single chip, an unheard-of number at the time. It's a charmingly small-potatoes number now, given that in 2024, a company unveiled a 4 trillion-transistor chip.

In 1968, Moore would co-found the chipmaker Intel, where his doubling law would go from a casual observation to a motivation for innovation.

Despite its name, Moore's law was never an ironclad rule. In 1975, Moore revised the pace of progress downward, to a doubling of transistors every two years rather than every year. That more modest doubling rate would become the official Moore's law, which would hold for years after. This relentless drive toward more computing power and miniaturization is what enables virtually all modern electronics, from the personal computer to the smartphone.
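To get a feel for how much the doubling period matters, here is a rough Python sketch of the arithmetic. The function name `projected_count` and the starting value of 64 components are illustrative assumptions, not figures from Moore's talk or editorial; the starting value was picked only because ten yearly doublings from it land near the 65,000-component prediction.

```python
def projected_count(start_count: float, years: float, doubling_period_years: float) -> float:
    """Project a component count under steady exponential doubling."""
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    # Each doubling period multiplies the count by 2.
    return start_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: 64 components on a chip (an assumption for illustration).
start = 64

yearly = projected_count(start, years=10, doubling_period_years=1)
biennial = projected_count(start, years=10, doubling_period_years=2)

print(f"Doubling every year for 10 years: {yearly:,.0f}")
print(f"Doubling every two years for 10 years: {biennial:,.0f}")
```

Under these assumptions, a decade of yearly doubling multiplies the count by 1,024, while doubling every two years multiplies it by only 32. That gap is why the 1975 revision was a meaningful slowdown even though both versions describe exponential growth.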

For years, people predicted that the law would become outdated, but it proved remarkably resilient for quite some time.

"The fact that we've been able to continue [Moore's law] this long has surprised me more than anything," Moore said in an interview with The Electrochemical Society in 2016. "There always seems to be an impenetrable barrier down the road, but as we get closer to it, people come up with solutions."

In time, however, the principle stopped holding, though it's not clear exactly when Moore's law became defunct. In its canonical form, the law likely died in 2016, as it took Intel five years to move from its 14-nanometer process technology to 10 nanometers. Moore lived to see this happen; he died in 2023 at age 94.

Ultimately, Moore's "law" had to peter out because it runs up against the actual laws of physics. As transistors became ever smaller, quantum mechanics, the physics that governs the very small, began to play an outsize role. The world's smallest transistors can face problems with "quantum tunneling," wherein electrons in one tiny transistor can tunnel into another, thereby allowing current to flow in transistors that should be in the "off" position.

As a result, chipmakers are looking at designing chips with new materials and new architecture. The next Moore's law may apply to quantum computers, which harness quantum mechanics as a feature, not a bug, of calculations.

Tia Ghose, Editor-in-Chief (Premium)

Tia is the editor-in-chief (premium) and was formerly managing editor and senior writer for Live Science. Her work has appeared in Scientific American, Wired.com, Science News and other outlets. She holds a master's degree in bioengineering from the University of Washington, a graduate certificate in science writing from UC Santa Cruz and a bachelor's degree in mechanical engineering from the University of Texas at Austin. Tia was part of a team at the Milwaukee Journal Sentinel that published the Empty Cradles series on preterm births, which won multiple awards, including the 2012 Casey Medal for Meritorious Journalism.

You must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Scientists unveil world's first quantum computer built with regular silicon chips

Scientists unveil world's first quantum computer built with regular silicon chips

Science history: First computer-to-computer message lays the foundation for the internet, but it crashes halfway through — Oct. 29, 1969

Science history: First computer-to-computer message lays the foundation for the internet, but it crashes halfway through — Oct. 29, 1969

Scientists create world's first microwave-powered computer chip — it's much faster and consumes less power than conventional CPUs

Scientists create world's first microwave-powered computer chip — it's much faster and consumes less power than conventional CPUs

New semiconductor could allow classical and quantum computing on the same chip, thanks to superconductivity breakthrough

New semiconductor could allow classical and quantum computing on the same chip, thanks to superconductivity breakthrough

China solves 'century-old problem' with new analog chip that is 1,000 times faster than high-end Nvidia GPUs

China solves 'century-old problem' with new analog chip that is 1,000 times faster than high-end Nvidia GPUs

IBM unveils two new quantum processors — including one that offers a blueprint for fault-tolerant quantum computing by 2029

Latest in Technology

IBM unveils two new quantum processors — including one that offers a blueprint for fault-tolerant quantum computing by 2029

Latest in Technology

New 'physics shortcut' lets laptops tackle quantum problems once reserved for supercomputers and AI

New 'physics shortcut' lets laptops tackle quantum problems once reserved for supercomputers and AI

MIT invention uses ultrasound to shake drinking water out of the air, even in dry regions

MIT invention uses ultrasound to shake drinking water out of the air, even in dry regions

When an AI algorithm is labeled 'female,' people are more likely to exploit it

When an AI algorithm is labeled 'female,' people are more likely to exploit it

For traveling photographers — whether you're chasing dark skies or tracking wildlife, NordVPN is now 74% cheaper

For traveling photographers — whether you're chasing dark skies or tracking wildlife, NordVPN is now 74% cheaper

The 7 best running headphone deals that remain for Cyber Monday

The 7 best running headphone deals that remain for Cyber Monday

Your AI-generated image of a cat riding a banana exists because of children clawing through the dirt for toxic elements. Is it really worth it?

Latest in Features

Your AI-generated image of a cat riding a banana exists because of children clawing through the dirt for toxic elements. Is it really worth it?

Latest in Features

Science history: Female chemist initially barred from research helps helps develop drug for remarkable-but-short-lived recovery in children with leukemia — Dec. 6, 1954

Science history: Female chemist initially barred from research helps helps develop drug for remarkable-but-short-lived recovery in children with leukemia — Dec. 6, 1954

What if Antony and Cleopatra had defeated Octavian?

What if Antony and Cleopatra had defeated Octavian?

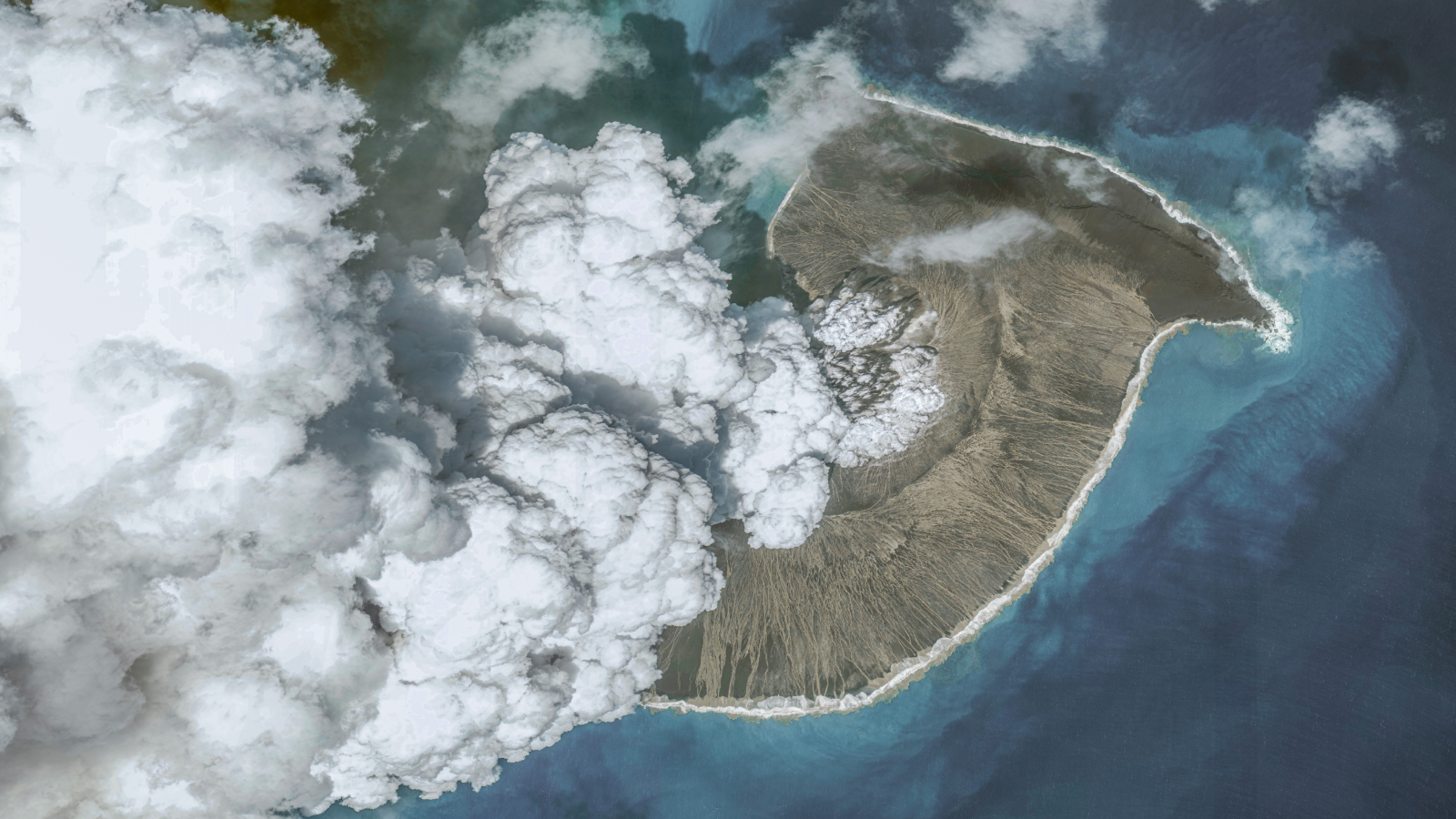

What was the loudest sound ever recorded?

What was the loudest sound ever recorded?

A woman got a rare parasitic lung infection after eating raw frogs

A woman got a rare parasitic lung infection after eating raw frogs

Science history: Computer scientist lays out 'Moore's law,' guiding chip design for a half century — Dec. 2, 1964

Science history: Computer scientist lays out 'Moore's law,' guiding chip design for a half century — Dec. 2, 1964

'Intelligence comes at a price, and for many species, the benefits just aren't worth it': A neuroscientist's take on how human intellect evolved

LATEST ARTICLES

'Intelligence comes at a price, and for many species, the benefits just aren't worth it': A neuroscientist's take on how human intellect evolved

LATEST ARTICLES 1Strangely bleached rocks on Mars hint that the Red Planet was once a tropical oasis

1Strangely bleached rocks on Mars hint that the Red Planet was once a tropical oasis- 22,400-year-old 'sacrificial complex' uncovered in Russia is the richest site of its kind ever discovered

- 3Ethereal structure in the sky rivals 'Pillars of Creation' — Space photo of the week

- 4What was the loudest sound ever recorded?

- 5New 3I/ATLAS images show the comet getting active ahead of close encounter with Earth