Alan Mazzocco/Shutterstock

Just a few short years after its release, ChatGPT has become the world's de facto digital multi-tool for everything from a simple Google search to planning an event. Though I'm quite pessimistic about how useful ChatGPT (and its ilk) is, I can't deny that it provides some utility. However, there is a laundry list of things that no one should be using ChatGPT (or any chatbot) for.

Too many people treat ChatGPT like an oracle, not like a multi-modal LLM that is heavily prone to misinformation and hallucinations. They assume it knows everything and can do anything. The unsettling reasons why you should avoid using ChatGPT have been discussed practically to death. It's said some pretty creepy things and has given a lot of people straight-up terrible advice on a number of subjects. Chatbots can provide utility, but that assumes you know what they shouldn't be used for. Here are 14 things you should take to a real person, not to OpenAI.

Anything involving personal information

pakww/Shutterstock

If you take only one thing away from this article, let it be this: Your chats with ChatGPT are not private. OpenAI makes it abundantly clear in its privacy policy that it collects your prompts and uploaded files. Unless you've been living under a rock for the past 20 years, you know tech companies treat privacy policies like pinky promises and are frequent victims of serious data breaches. To be fair to ChatGPT, it doesn't seem to use your data and chat history as unethically as Meta does, but you should still avoid giving the chatbot personal information.

For one, your chats are not always between only you and OpenAI. OpenAI has suffered data breaches and various hiccups that exposed private chatbot chats. You simply never know if the chat you're having (where you've said your name, your age, etc.) could end up on a hacker's PC or on the dark web for bad actors to purchase and wield against you.

Two, chatbots can sometimes regurgitate what they've been trained on, word for word. A shocking study by Cornell University reported that some chatbot models can be coaxed into producing "near-verbatim" copies of their original training data — in this case, a full Harry Potter novel. It stands to reason that any information you give ChatGPT could end up being "memorized" by the chatbot and revealed (intentionally or otherwise) at some later date.

Anything illegal

Brianajackson/Getty Images

Google searches have been used as evidence to convict people many times in the past. ChatGPT can't help you with anything illegal, in theory, but people have discovered how to bypass its guardrails quite easily. Disguising prohibited prompts as poetry, for example, can get you an answer to some pretty shocking stuff. Ethical reasons aside, it's still a bad idea to ask ChatGPT for help with anything illegal.

The first reason echoes the case against sharing personal info: Your chats are not private, and they can be used against you in a court of law. This has happened already. You should not ask ChatGPT about anything illegal, even jokingly.

The second reason is that since ChatGPT is deeply prone to hallucination, the information you get (even if you bypass the guardrails) could be wrong — and dangerously so. You can probably imagine at least a couple of illegal activities that could risk your well-being if done incorrectly; imagine getting bad information on how to partake in hallucinogenic drugs. And if neither moral nor self-preservation reasons are enough, then the threat of getting banned from using ChatGPT should be.

Requests to analyze protected information

Sean Gallup/Getty Images

Many people regularly use ChatGPT as a sort of summary and analysis tool. You can copy in large amounts of text and have it boil it down, check for errors, and find correlations. We admit there's a lot of potential here for using it in work contexts where you're dealing with a lot of text and just need the SparkNotes version, but if the information you're inputting is proprietary or has protections surrounding how it can be used and shared, then please, please, please don't dump it into ChatGPT.

Hopefully it's now obvious why you shouldn't do this. OpenAI (and sometimes bad actors) has access to your prompts. Imagine you're a healthcare professional working with HIPAA-protected information, and you enter that into ChatGPT. Someone's highly personal medical information is now at serious risk, even if you had good intentions.

This sort of thing has happened before. Back in 2023, Samsung discovered that some of its employees were putting proprietary information (like source code) into the chatbot (via CS Hub). While it's not clear if this came back to bite Samsung, we've already explained how user-inputted information could be cajoled out of the chatbot. It's best to treat ChatGPT like your town's local gossip; always operate under the assumption that what you say to it could eventually be spilled to everyone else.

Medical advice

TippaPatt/Shutterstock

To beat a dead horse that we will continue to beat throughout this article, ChatGPT cannot be trusted 100%. It's often flat-out wrong or makes up convincing-sounding lies — and as many people have discovered, it will agree that it's wrong if you correct it. Even some medical professionals who have tried to find ways to use ChatGPT responsibly admit that it's only good at very generalized answers, not specialized advice for your unique symptoms. But to our chagrin, ChatGPT hasn't been banned from giving medical advice. You can still ask ChatGPT right now, "Hey, what's that sharp pain in my side?" and rather than telling you to rush to an emergency room, it may tell you to just drink water.

Understand that chatbots have no way of discerning truth from fiction. All they're doing is gathering a huge amount of data — regardless of where it comes from or whether it has any truth to it — mashing it up and then feeding you a nice-sounding answer. ChatGPT is not a trained healthcare professional and probably never will be. At best, you can ask it for source-backed answers, but even then you should check those sources carefully. People have literally gotten sick trying to follow chatbot advice.

Relationship counseling

Rgstudio/Getty Images

It's quite alarming how many people have turned to ChatGPT for relationship advice. ChatGPT is trained on an internet of random information, including the absolute last places you should ever get relationship advice. You run the real risk of a chatbot regurgitating some unhinged nonsense from some trolling Redditor on r/relationshipadvice.

Bad relationship advice from ChatGPT is all too common. Either the advice is just wrong, or it's sycophantically agreeing with your problematic mindsets and delusions, encouraging you when it ought to be calling you out. There are other problems, too. It doesn't do therapy the way therapists do it (i.e., being cautious about giving straight advice). It doesn't understand common practice in counseling (like the proper approach to a partner admitting infidelity). And it can produce different results depending on how you frame your question, among other things (via Psychology Today).

Further, a chatbot has no idea if you're exaggerating your partner's actions and concealing your own, and it can't understand the fuller, deeper context of your life to know what's really going on and what the proper solution might be. It can't pick up non-verbal cues, nor subtle hints that there might be an abusive relationship. There's also the issue that chatbots acquire the cultural biases of their creators, so their relationship advice might work for some people and be outright terrible for others. Regardless of what it's doing, it will never, ever compare to a trained relationship counselor — and you shouldn't treat it as one.

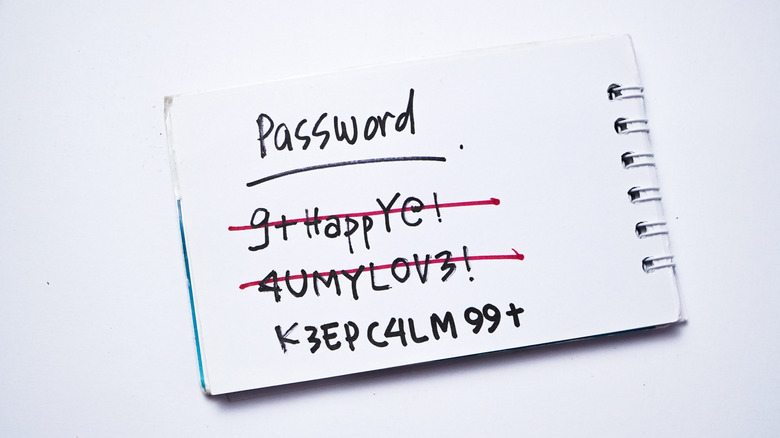

Password generation

Ica-Photo/Shutterstock

It may seem like ChatGPT is fine for the small things, like generating a password. Chatbots are great at making stuff up, so certainly they can make a great, unguessable password, right? They can, but you shouldn't.

Keeper Security gives a few insightful reasons why. For one, there's a good chance it's going to produce the same password for other people asking the same question. Second, unless you give it further guidance, the password recommendations may not be strong enough. I've also personally noticed how chatbots fail miserably at counting; ask ChatGPT to produce a 20-character password, for example, and it may spit out a 17-character one. Last but not least (say it with us), ChatGPT saves your prompts and its own outputs to improve its models and can regurgitate information verbatim.

What should you use instead? Try a password generator. Most major password managers bundle one into their apps for free. You can also find standalone generators online that do a great job of this, such as Bitwarden's free password generator tool.
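For the curious, here is a rough idea of what a dedicated generator does differently from a chatbot. This is a minimal sketch in Python, not how Bitwarden or any specific password manager actually implements it; the function name `generate_password` and the rule of requiring one character from each class are our own assumptions for illustration. The key point is that it pulls randomness from the operating system's secure source (via Python's standard `secrets` module) and always returns exactly the length you asked for.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password of exactly `length` characters.

    Hypothetical helper for illustration only. Characters are drawn with
    `secrets.choice`, which uses the operating system's cryptographically
    secure random source rather than a predictable generator.
    """
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")

    alphabet = string.ascii_letters + string.digits + string.punctuation

    # Keep drawing until the candidate contains a lowercase letter, an
    # uppercase letter, a digit, and a symbol (an assumed policy; adjust
    # to whatever the site you're signing up for requires).
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    password = generate_password(20)
    print(password, len(password))
```

Nothing here leaves your machine, nothing gets logged to someone else's server, and the character count is enforced by the code rather than guessed at.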

Therapy

Choreograph (konstantin Yuganov)/Getty Images

Therapy is one of those things that virtually everyone needs, but few can really afford. Even the "cheap" online therapy services can easily cost you $65 a session out of pocket, and insurance coverage for mental health is often limited. It's hard to blame someone who desperately needs to talk it out for resorting to a chatbot. Nonetheless, this is a bad idea for many of the same reasons we've already outlined.

Once again, the biggest issue here is ChatGPT's tendency toward sycophancy. It's tuned to fawn over and praise you more than it takes you to task, which in a therapeutic environment means it may cheer you on with things that are objectively harmful to you. The issue with treating mental health is that people need an external, objective, professional voice that won't accidentally reinforce delusions. Anyone who has suffered from mental health issues knows very well that it's easy to seek validation on things that absolutely should not be validated.

As a case in point, there is an ongoing lawsuit in which ChatGPT is alleged to have driven a teen to suicide. The evidence is pretty damning, but OpenAI denies wrongdoing. Imagine having a loved one whose mental health worsens because they turned to ChatGPT, and then having OpenAI refuse to take responsibility. And even those in favor of using ChatGPT for therapy admit it has some serious limitations.

Repair help

PeopleImages/Shutterstock

Unless there's an in-depth repair manual, fixing a car or device condemns you to hours spent trawling through online forums until you find that one person who had the same issue as you and got help. It seems the logical solution is to use a chatbot; it's been trained on an entire internet, after all, and may regurgitate the particular solution you need. As tantalizing as that may sound, we strongly recommend against using ChatGPT to help with repairs of any kind.

As proof of why, we point you to the many people who have tried to use ChatGPT to fix their car. The results are unsurprising. ChatGPT might get the broad strokes right, as it usually does, but with the specific details, not so much. And that's to say nothing of when it gives false or hallucinated information. A lot of experts (the people who'd know best when it's wrong) have put it to the test and been unimpressed.

This isn't to say that it's a complete lost cause. Even plumbers use ChatGPT nowadays, with some success. But when you're dealing with a (likely expensive) car or device that could be ruined with the wrong fix, just take it to a professional.

Finance advice

Ramzan Creation/Shutterstock

You know exactly what we're going to say: You should think twice about asking ChatGPT for financial advice. Again (and we'll say this till we're blue in the face), ChatGPT can give false or completely fabricated information based on problematic training data. Finance, generally speaking, is a complicated subject. We're talking about something that encompasses stocks, retirement accounts, and real estate, and that varies from place to place and culture to culture. Investment portfolio managers, accountants, CPAs, you name it: These are all distinct careers that require decades of experience to do well. It's ludicrous to think a chatbot can be a jack-of-all-trades when it comes to anything money-related.

As we made clear, certain prompts may be acceptable. Asking for generalized information on how to save money or cut expenses — when taking ChatGPT's words with a grain of salt — is probably okay. At the same time, turn to professionals when you should. Don't ask ChatGPT for tax help, to give one of many examples. Saying ChatGPT is the reason you committed tax fraud is probably not going to hold up in court.

Help with homework

BongkarnGraphic/Shutterstock

For millennia, institutions of learning have been built on having students learn how to acquire and analyze information, how to problem solve, and how to do all that under deadlines and stress, all of which is key to making intelligent, adaptive people. Now that may be a thing of the past. ChatGPT is going to have a profound impact on education, which will hit society at large broadside as ChatGPT-educated students enter the workforce. Rather than stem the tide of AI in education, major institutions (like Harvard) are welcoming it with open arms. That ain't good.

To be fair, it's too early to say how damaging ChatGPT-ized education will be, but it will be damaging. ChatGPT has been out in the wild for a few years now, and already studies are showing that frequent users cognitively underperform. Even if we're not talking about a person's academic performance, there's also the risk of developing a chatbot dependency. Imagine how difficult it would be for a student who's used ChatGPT every day for four years to then do something without it — like building the house you'll live in. Long story short, learn the "old-fashioned" way. You'll be a cut above your ChatGPT-leaning peers.

Drafting legal documents

PeopleImages/Shutterstock

You'd think this would be self-explanatory. Lawyers have to go to school for years and then pass an incredibly difficult bar exam before they can practice law. Major legal battles can take years. How could a chatbot hope to compare? Yet lawyers have already tried to make ChatGPT do the hard work for them, with disastrous results. Even back in 2023, when ChatGPT was new, we had lawyers filing legal briefs with citations that (shocker) were completely made up (via Reuters).

Once again, we repeat that this is not us suggesting that ChatGPT has no place here whatsoever, but the place it has is limited. Even Harvard Law School, in an attempt to be open-minded and generous to ChatGPT, admits to considerable limitations. It's not hard to see why this is the case. Laws vary from place to place, from industry to industry, and there are hundreds of years of evolving legal precedent to factor in. So the last thing you want to do is have ChatGPT write up an apartment contract for your new tenant, only for them to ruin the place because the contract didn't stop them.

Future predictions

Shutter_m/Getty Images

Not even the top experts in most fields can predict the future, so why would ChatGPT somehow do that better? We have belabored the point again and again that chatbots are highly prone to getting things wrong. If they can't get the facts straight about today, it's hard to imagine them being accurate about what's going to happen tomorrow.

This is a very broad word of warning. If you're using ChatGPT to decide which horse to bet on, as it were, think again. Don't ask it which stocks to buy, which team will win, or which industry you should dedicate your education track to in the hopes it'll go gangbusters. These are random examples, but you get the point. You can ask it where it thinks humanity will be in 200 years out of gentle curiosity, taking nothing seriously, but that's about the extent of what we'd recommend.

Advice in an emergency

Hispanolistic/Getty Images

Time is of the essence when someone's life is on the line. Whether you've been in a crash or there's a fire, many might be tempted in that situation to ask ChatGPT what to do. You see where this is going. The advice ChatGPT gives you could be wrong, and since this is an emergency we're talking about, wrong information could lead to danger, injury, and/or death.

We'd recommend being prepared for emergencies before they happen rather than asking ChatGPT in a pinch. You lose nothing taking five minutes to learn CPR or the Heimlich maneuver, or participating in a fire drill. Prepare yourself with equipment, too; a few well-chosen gadgets can save your life in various emergencies. And of course, if you have cell reception, call 911 in the event of an emergency rather than leaving things to chance. Turn to professionals rather than chatbots, because frankly, this is where chatbots can be relied upon least.

Anything political

Cemile Bingol/Getty Images

Chatbots are not blank slates capable of dispassionate objectivity. They're more like sponges, acquiring the paradigms of their creators and their training data. The problem with politics is that it's a mostly subjective affair that has more to do with what people value than what is true. As such, ChatGPT is highly prone to partisanship.

Which side ChatGPT is sympathetic to keeps changing. People once complained that ChatGPT was unfairly left-leaning, but more recently, they're saying the political seesaw is tipping in the opposite direction. We're not here to comment on whether the left or the right is better; our point is that ChatGPT is not the source to go to for your politics. Either it's going to sycophantically validate whatever it is you already believe (not good if you've been indoctrinated with a problematic ideology), or it's going to feed you loaded, biased, incorrect information. After all, ChatGPT has been trained just as much on thoughtful political discussions by intelligent, educated people as it has been on racist and sexist screeds by random wacko internet psychos.